Ich verfolge ja schon länger die Lobeshymnen von Usern über die Einrichtung von Tailscale auf Twitter, die der Tailscale CEO @apenwarr dort regelmässig retweetet:

My favourite CEO activity each month is combing through the feedback from happy @Tailscale users as we assemble our internal newsletter. In the spirit of the new year, here are some highlights from 2021!

— apenwarr (@apenwarr) December 31, 2021

Jetzt bin ich im Zuge meiner Recherchen zum Thema Overlay-/Mesh-Netzwerke endlich mal dazu gekommen, Tailscale selbst zu benutzen.

Und die Einrichtung stellt sich tatsächlich so simpel dar, wie die anderen, zufriedenen Kunden von Tailscale berichten.

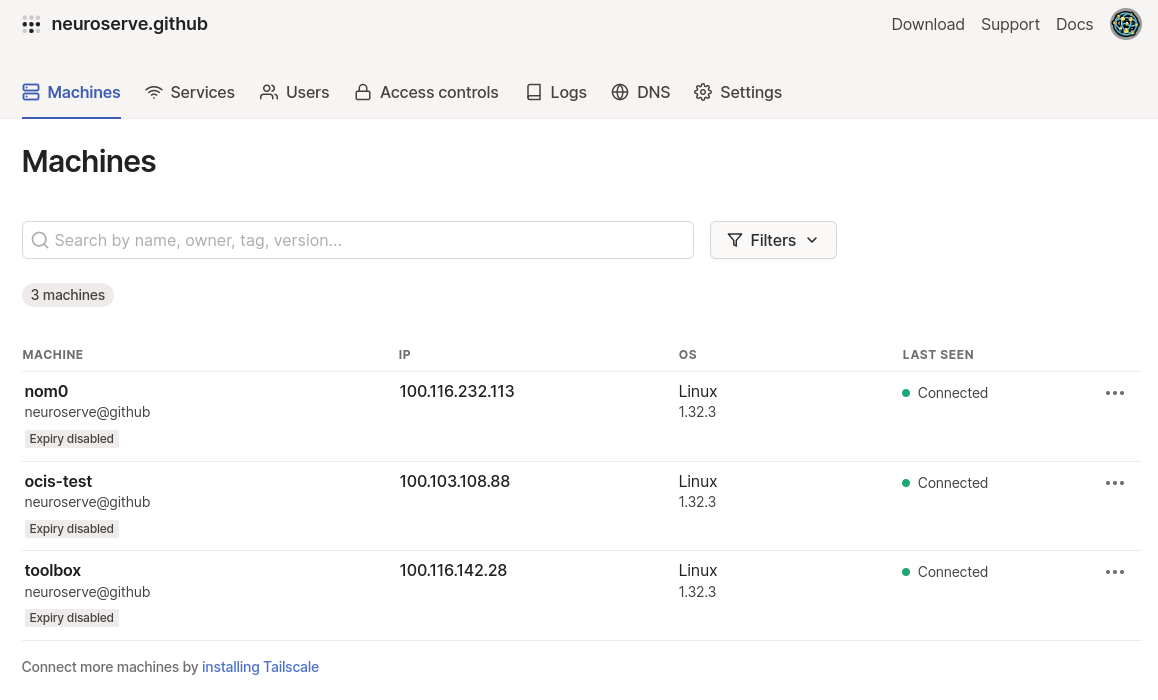

Die Installation für die Eiligen ist tatsächlich ein curl -fsSL https://tailscale.com/install.sh | sh für Linux, welches das Tailscale-Repo in der Paketverwaltung installiert und damit den Tailscale-Client installiert. tailscale up startet den Client und gibt eine URL aus, bei der man sich z. B. mit Github Kennung anmelden kann. Ist das geschehen, bekommt man eine Liste der Clients im Tailscale-VPN angezeigt:

Hier habe ich drei VMs aus drei verschiedenen Cloudumgebungen miteinander vernetzt. Um die NFS-Performance zwischen Pluscloud open und Pluscloud V zu testen, habe ich in der pco einen NFS-Server installiert und das freigegebene Filesystem auf der VM in der pcv gemountet. Der fio-Aufruf sieht wie folgt aus: fio --name=fiobench --directory="/mnt/bench" --ioengine=libaio --direct=1 --numjobs=2 --nrfiles=4 --runtime=30 --group_reporting --time_based --stonewall --size=8G --ramp_time=20 --bs=64k --rw=randread --iodepth=4 --fallocate=none --output=/opt/root/outputs/randread-4-64k

Der für "randwrite" entsprechend.

Randomread mit 64 kb Blockgröße:

fiobench: (g=0): rw=randread, bs=(R) 64.0KiB-64.0KiB, (W) 64.0KiB-64.0KiB, (T) 64.0KiB-64.0KiB, ioengine=libaio, iodepth=4

...

fio-3.28

Starting 2 processes

fiobench: (groupid=0, jobs=2): err= 0: pid=6337: Fri Nov 25 11:43:02 2022

read: IOPS=20, BW=1355KiB/s (1388kB/s)(40.0MiB/30225msec)

slat (nsec): min=7443, max=74238, avg=13110.89, stdev=6798.83

clat (msec): min=117, max=984, avg=379.32, stdev=133.67

lat (msec): min=117, max=984, avg=379.34, stdev=133.67

clat percentiles (msec):

| 1.00th=[ 153], 5.00th=[ 199], 10.00th=[ 232], 20.00th=[ 268],

| 30.00th=[ 305], 40.00th=[ 334], 50.00th=[ 359], 60.00th=[ 388],

| 70.00th=[ 422], 80.00th=[ 472], 90.00th=[ 550], 95.00th=[ 625],

| 99.00th=[ 844], 99.50th=[ 860], 99.90th=[ 986], 99.95th=[ 986],

| 99.99th=[ 986]

bw ( KiB/s): min= 640, max= 2048, per=99.47%, avg=1348.35, stdev=165.17, samples=120

iops : min= 10, max= 32, avg=21.07, stdev= 2.58, samples=120

lat (msec) : 250=13.88%, 500=71.77%, 750=13.09%, 1000=2.21%

cpu : usr=0.02%, sys=0.01%, ctx=661, majf=0, minf=116

IO depths : 1=0.0%, 2=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=634,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

READ: bw=1355KiB/s (1388kB/s), 1355KiB/s-1355KiB/s (1388kB/s-1388kB/s), io=40.0MiB (41.9MB), run=30225-30225msec

Randomwrite mit 64 kb Blockgröße:

fiobench: (g=0): rw=randwrite, bs=(R) 64.0KiB-64.0KiB, (W) 64.0KiB-64.0KiB, (T) 64.0KiB-64.0KiB, ioengine=libaio, iodepth=4

...

fio-3.28

Starting 2 processes

fiobench: (groupid=0, jobs=2): err= 0: pid=6350: Fri Nov 25 11:46:20 2022

write: IOPS=23, BW=1542KiB/s (1579kB/s)(45.6MiB/30299msec); 0 zone resets

slat (usec): min=6, max=418, avg=17.33, stdev=18.31

clat (msec): min=67, max=2262, avg=332.41, stdev=226.02

lat (msec): min=67, max=2262, avg=332.43, stdev=226.02

clat percentiles (msec):

| 1.00th=[ 153], 5.00th=[ 199], 10.00th=[ 215], 20.00th=[ 232],

| 30.00th=[ 247], 40.00th=[ 266], 50.00th=[ 279], 60.00th=[ 296],

| 70.00th=[ 317], 80.00th=[ 359], 90.00th=[ 447], 95.00th=[ 625],

| 99.00th=[ 1569], 99.50th=[ 2198], 99.90th=[ 2265], 99.95th=[ 2265],

| 99.99th=[ 2265]

bw ( KiB/s): min= 256, max= 3072, per=100.00%, avg=1607.40, stdev=292.91, samples=115

iops : min= 4, max= 48, avg=25.11, stdev= 4.58, samples=115

lat (msec) : 100=0.41%, 250=31.91%, 500=60.22%, 750=4.56%, 1000=1.52%

lat (msec) : 2000=1.66%, >=2000=0.55%

cpu : usr=0.02%, sys=0.01%, ctx=810, majf=0, minf=116

IO depths : 1=0.0%, 2=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,724,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):

WRITE: bw=1542KiB/s (1579kB/s), 1542KiB/s-1542KiB/s (1579kB/s-1579kB/s), io=45.6MiB (47.8MB), run=30299-30299msec

Zum Vergleich dieselben Aufrufe auf einer VM, die ein Volume von unserem NetApp Storagesystem mountet (Randread 64 kb):

fiobench: (g=0): rw=randread, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=4

fiobench: (g=0): rw=randread, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=4

fio-2.0.13

Starting 2 processes

fiobench: (groupid=0, jobs=2): err= 0: pid=14578: Fri Nov 25 13:24:06 2022

read : io=4313.9MB, bw=147173KB/s, iops=2299 , runt= 30015msec

slat (usec): min=3 , max=304 , avg= 9.30, stdev= 8.03

clat (usec): min=287 , max=132836 , avg=3468.21, stdev=4469.22

lat (usec): min=315 , max=132844 , avg=3476.73, stdev=4469.33

clat percentiles (usec):

| 1.00th=[ 422], 5.00th=[ 700], 10.00th=[ 740], 20.00th=[ 780],

| 30.00th=[ 812], 40.00th=[ 852], 50.00th=[ 924], 60.00th=[ 1144],

| 70.00th=[ 3536], 80.00th=[ 7648], 90.00th=[10048], 95.00th=[11840],

| 99.00th=[17280], 99.50th=[20608], 99.90th=[32640], 99.95th=[37120],

| 99.99th=[53504]

bw (KB/s) : min= 3, max=91776, per=49.17%, avg=72372.18, stdev=12110.28

lat (usec) : 500=2.16%, 750=10.30%, 1000=43.10%

lat (msec) : 2=12.05%, 4=2.92%, 10=19.13%, 20=9.76%, 50=0.55%

lat (msec) : 100=0.02%, 250=0.01%

cpu : usr=0.75%, sys=2.29%, ctx=141554, majf=0, minf=174

IO depths : 1=0.1%, 2=0.1%, 4=168.1%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued : total=r=69016/w=0/d=0, short=r=0/w=0/d=0

Run status group 0 (all jobs):

READ: io=4313.9MB, aggrb=147173KB/s, minb=147173KB/s, maxb=147173KB/s, mint=30015msec, maxt=30015msec

Randwrite mit 64 kb Blockgröße:

fiobench: (g=0): rw=randwrite, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=4

fiobench: (g=0): rw=randwrite, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=4

fio-2.0.13

Starting 2 processes

fiobench: (groupid=0, jobs=2): err= 0: pid=14510: Fri Nov 25 13:22:42 2022

write: io=18234MB, bw=622349KB/s, iops=9724 , runt= 30001msec

slat (usec): min=3 , max=24783 , avg=20.16, stdev=42.46

clat (usec): min=278 , max=9296 , avg=800.13, stdev=369.79

lat (usec): min=411 , max=9335 , avg=821.63, stdev=369.53

clat percentiles (usec):

| 1.00th=[ 474], 5.00th=[ 524], 10.00th=[ 556], 20.00th=[ 604],

| 30.00th=[ 644], 40.00th=[ 676], 50.00th=[ 716], 60.00th=[ 756],

| 70.00th=[ 804], 80.00th=[ 884], 90.00th=[ 1064], 95.00th=[ 1384],

| 99.00th=[ 2416], 99.50th=[ 2864], 99.90th=[ 4128], 99.95th=[ 4896],

| 99.99th=[ 7328]

bw (KB/s) : min= 3, max=384512, per=49.25%, avg=306494.12, stdev=58407.53

lat (usec) : 500=2.80%, 750=55.85%, 1000=28.99%

lat (msec) : 2=10.42%, 4=1.82%, 10=0.12%

cpu : usr=3.54%, sys=10.90%, ctx=815964, majf=0, minf=46

IO depths : 1=0.1%, 2=0.1%, 4=167.7%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued : total=r=0/w=291730/d=0, short=r=0/w=0/d=0

Run status group 0 (all jobs):

WRITE: io=18234MB, aggrb=622349KB/s, minb=622349KB/s, maxb=622349KB/s, mint=30001msec, maxt=30001msec

Im Übrigen möchte ich von jemandem, der sich "System Engineer" nennt, nicht mehr hören, dass etwas "Scheiße performt". Allenfalls möchte ich hören wie "Scheiße" etwas performt. Fio bietet sich an, dafür Zahlen zu beschaffen.

Zusätzliche Hinweise können noch Tests mit iperf3 geben. Zunächst habe ich die VM in der Pluscloud open als "Server" konfiguriert und jeweils von den VMs aus der Pluscloud V und aus der Triton-Umgebung getestet. Danach habe ich die "Server"-Rolle jeweils einmal auf die anderen VMs übertragen und von den jeweils verbleibenden VMs getestet:

Triton -> pco (stage1)

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 183 MBytes 25.6 Mbits/sec 423 sender

[ 5] 0.00-60.02 sec 183 MBytes 25.6 Mbits/sec receiver

Triton -> pco (prod1)

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 193 MBytes 27.0 Mbits/sec 644 sender

[ 5] 0.00-60.02 sec 193 MBytes 26.9 Mbits/sec receiver

pco (stage1) -> Triton

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 111 MBytes 15.5 Mbits/sec 491 sender

[ 5] 0.00-60.02 sec 111 MBytes 15.5 Mbits/sec receiver

pco (prod1) -> Triton

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 140 MBytes 19.6 Mbits/sec 480 sender

[ 5] 0.00-60.04 sec 140 MBytes 19.6 Mbits/sec receiver

pco (stage1) -> pcv

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 82.4 MBytes 11.5 Mbits/sec 280 sender

[ 5] 0.00-60.02 sec 82.1 MBytes 11.5 Mbits/sec receiver

pco (prod1) -> pcv

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 114 MBytes 15.9 Mbits/sec 410 sender

[ 5] 0.00-60.04 sec 113 MBytes 15.8 Mbits/sec receiver

pcv -> pco (stage1)

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 93.5 MBytes 13.1 Mbits/sec 412 sender

[ 5] 0.00-60.04 sec 93.3 MBytes 13.0 Mbits/sec receiver

pcv -> pco (prod1)

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 79.1 MBytes 11.1 Mbits/sec 270 sender

[ 5] 0.00-60.04 sec 79.0 MBytes 11.0 Mbits/sec receiver

Triton -> pcv

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 80.6 MBytes 11.3 Mbits/sec 363 sender

[ 5] 0.00-60.03 sec 80.4 MBytes 11.2 Mbits/sec receiver

pcv -> Triton

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-60.00 sec 74.0 MBytes 10.3 Mbits/sec 342 sender

[ 5] 0.00-60.05 sec 73.9 MBytes 10.3 Mbits/sec receiver